// On Commenting

For most of my adult life (with some periods here and there doing other things), I’ve earned my living by writing code. But whatever career success I’ve had, I attribute in large part to my regular English writing skills. This includes not just the squishy “communication” skills that all knowledge workers are gamely encouraged to cultivate, but the most fundamental kind of technical writing: adding comments to the code I write.

Despite the vocal disdain for the humanities spouted by certain tech leaders (“shape rotators” vs “wordcels,” anyone?), programming languages aren’t called languages for nothing. They have syntax and grammar, they have mechanisms for assigning words to abstract concepts, they borrow heavily from English sentence structure — but they also perform a similar function to natural language, which is to allow humans to set down their ideas and intentions in writing in a way that’s communicable to other humans (for most modern programming languages, making it communicable to machines requires a few extra steps).

Where they differ from natural language: a text written in a programming language must, ultimately, be translatable to sequences of machine operations, which makes their expressive potential necessarily much more limited. In fact, their range of expression is something studied and finitely bounded: if a programming language is capable of expressing all the tasks that can be performed by a Turing Machine (a kind of universal model for the basic elements of mechanical computing), it is said to be Turing-complete. In theory, a program written in any Turing-complete language could be translated to any of the others.

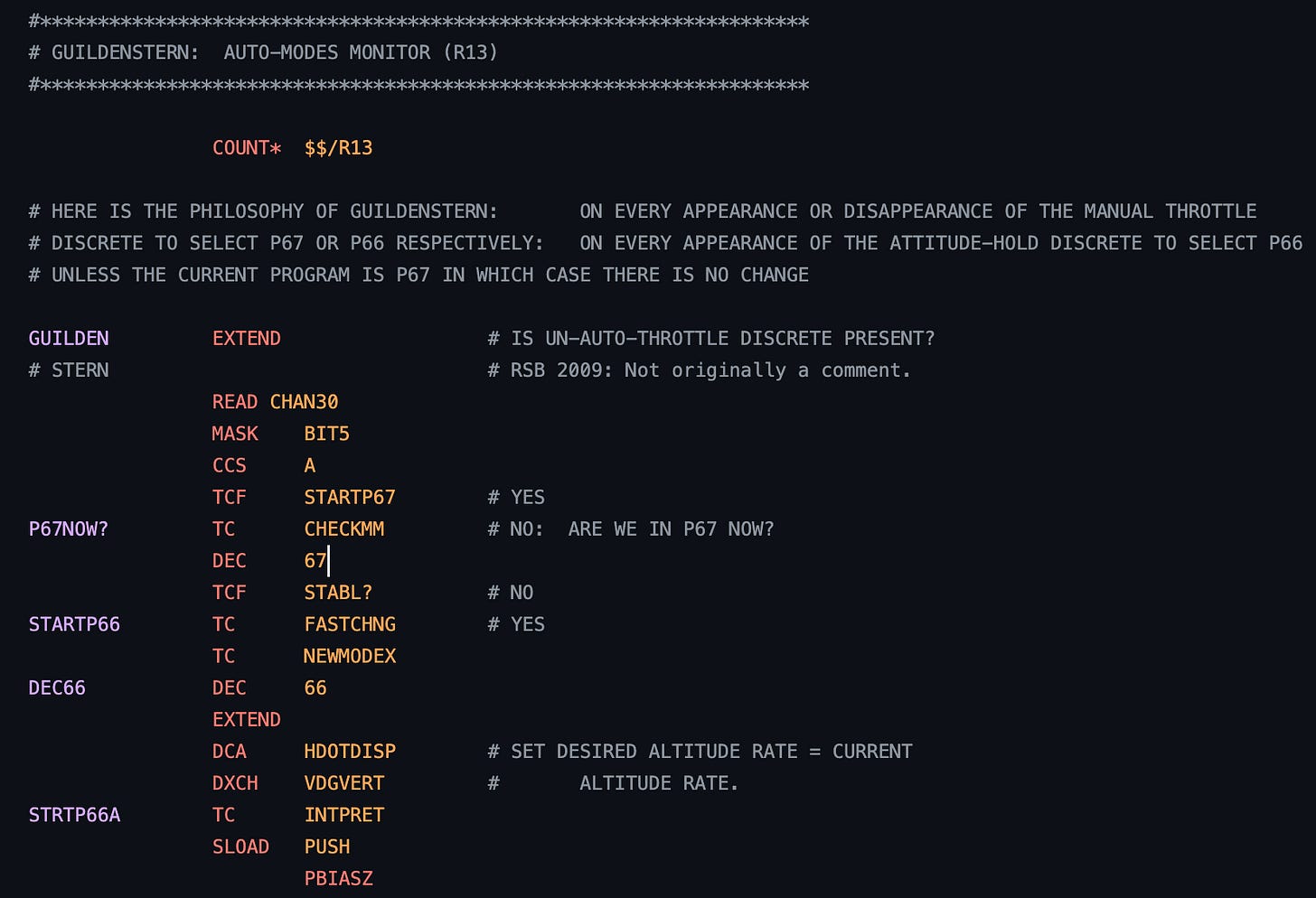

Every professional programming language has a mechanism for the code’s author to add notes, in whatever human language they choose, to help make the code more intelligible. Many early programming languages were so inscrutable that comments were absolutely necessary; this is less the case now. Some historically interesting source code (most notably for Apollo 11) has been published online so others can read, and often half the pleasure is in the comments: interspersed with the borderline-unreadable machine instructions are notes, mathematical explanations, and occasional references or jokes.

The code, then, is the primary text, and the comments are a metatext, ideally aiding in interpretation by translating, clarifying intent, and adding context. Of course, a gap can open up between the text and the metatext: the code is (ideally) unambiguous and deterministic, while the metatextual layer introduces elements that are untranslatable to the workings of a Turing machine.

When I was in school, it was taken as a given that thoroughly “commenting” the code you write is good practice and to be encouraged, and I have always followed suit. The truth is that I like writing comments, for the same reasons that I like writing this newsletter: because the process of setting my thoughts down as clearly and expressively as I can both satisfies me personally and helps me think better. Google was a particularly good place for a natural commenter to work. Its engineering culture has a history of mandating thorough and voluminous commenting. The style guide even recommends that comments be written in complete sentences with proper capitalization and punctuation, including a working understanding of commas vs. semicolons. It meant that I was rarely in the dark when reading unfamiliar code, and less likely to get tripped up by wrong assumptions. And I learned so much: about the routing engine, about traffic patterns, about random geographical facts.

However, I found out later that this attitude isn’t common everywhere. One of my culture shocks upon changing workplaces was the devaluing, to the point of outright hostility, of written comments in code. Browsing the code base, I found that large reams of essential functionality were left uncommented, leaving me to puzzle it out on my own. When I added comments in my own code, reviewers would sometimes ask me to take them out. Probing a little more, the ostensible objections were mostly about the potential gap in meaning between the text and the metatext: the code is truth, the comments may lie. Comments get outdated, and they can be misleading, the engineers said; often they confuse rather than clarify. And, if code is written clearly enough, it is self-explanatory: the ideal state is one where the code is so simply and elegantly written that commentary is superfluous.

Of course, there’s also a little bit of macho posturing underneath all this. A real engineer shouldn’t need comments; they should be smart enough to figure out how everything works from the code alone. Sometimes I also wonder if there’s an aesthetic objection: code is clean, human language is messy; comments not only add visual clutter but are fundamentally inelegant, like a dense column of smeared newsprint next to a haiku.

The trouble with the “code is pure, comments are pollution” attitude is that code is not like the laws of physics, as much as software engineers, in their grandiosity, might wish it to be so. The bits in a computer chip only carry meaning in relation to some human-defined task. From reading a piece of code, we can derive with some certainty what it does — how it changes the state of some system from A to B — but without the context of human intention, knowledge of what it’s for, it’s impossible to say whether it’s right or wrong. A bug is defined only by the gap between the intention and the result; it is not a natural fact. This means that it’s impossible for code to be clear by itself. If the intention is not specified somewhere in human terms, our understanding of the code must always be incomplete.

This is one of the naiveties of cryptocurrency “code is law” advocates, as well as anyone who believes that automated systems can replace human institutions: it works only if the code is a perfect expression of human intention, and given that the universe of human intention is much vaster than what can be computed via Turing machine, the code’s meaning will always be incomplete. The Mango hack, currently being tested in court, is an illustrative example.

For instance, this paper abstract seems almost touching in its innocence:

Blockchain technology comes with many newfound opportunities of turning law into code. By transposing legal or contractual provisions into a blockchain-based “smart contract” with a guarantee of execution, these rules are automatically enforced by the underlying blockchain network and will, therefore, always execute as planned, regardless of the will of the parties. This, of course, generates new problems related to the fact that no single party can affect the execution of that code. With the widespread adoption of Machine Learning, it is possible to circumvent some of the limitations of regulation by code. ML allows for the introduction of code-based rules which are inherently dynamic and adaptive, replicating some of the characteristics of traditional legal rules characterized by the flexibility and ambiguity of natural language.

It seems obvious to me on the face of it that the authors have misidentified the primary problem with “code is law” and that Machine Learning will not circumvent this problem but rather exacerbate it. And, I shake my head at people holding up the code-generating abilities of LLMs as proof of their intelligence, trading on the idea that someone who writes code must be smart: programming languages are far easier to learn than human ones, and the probability space for a “good” answer is much smaller and more repeatable. As proof of reasoning ability, it’s pretty weak, certainly weaker than the ability to write a college essay.

I think it would be interesting to apply literary theory to code, especially in the wake of LLM tools that can simulate, but not possess, understanding of human intention. All the post-structuralists are jumping on ChatGPT, of course, but I’d be more interested in studying the code itself than its human-imitating outputs. I am certainly not the first person to have this thought. Some googling led me to the dormant Substack Critical Code Theory, and I like the sound of its project; I wish it had lasted beyond the first essay.

Now, at work, I insist on writing beautifully-commented code. If someone asks me to remove a comment, with the reasoning that an engineer should be able to study the usages and figure it out themselves, I reply that this process is time-consuming and error-prone compared to the effort of reading a single sentence.

I suppose one argument for comments would be in the adage about how if you can’t explain how something works, then you probably don’t understand it yourself. I suppose a lot of my commenting was like that: notes to the future about anything that seemed non-obvious, and also proof of sorts that I knew how the code worked.

I used to code scientific models, meaning I was using some textbook or paper’s logic and equations on how to calculate something. I felt strongly that those sources needed to be documented in the code, and also any place where I took a shortcut compared to how the equations, for example, were laid out in the text.

With the Apollo code, the first file I opened at random was GIMBAL_LOCK_AVOIDANCE.agc (Command Module version). I don’t know what this is, but I can see in the code that they’ve documented shortcuts, for example the constants that were pre-calculated, probably to avoid using a software-based math function (nowadays, the performance advantage, say, of pre-calculating a cosine, would probably be negligible with built-in floating-point co-processors).

Those are GOOD comments! What they didn’t comment, though, is the physics of this gimbal thing, and why and how they’re calculating it. But I suppose in the late 60s, the people reviewing this code would be aero engineers, who could be assumed to know that.

Interesting that the Lunar Module version of this file is shorter but more extensively commented. Fancy that.

Thank you for writing this essay - I can't get over the criticism you describe. Criticism of what I expect are excellent and helpful comments!

I've been of two minds on commenting. In college, our profs taught us to comment and graded us on it. I did well enough. The goal was a thoughtful comment, one helpful to a future reader. From there on, most of my coding was embedded in systems and written in assembly. I necessarily added a lot of comments. I know I'm just restating the obvious.

My second mind emerged when I re-read my code about five years later. It was long enough for me to have learned a lot more about systems design and also long enough for me to have forgotten the specifics of the code I was reviewing. I found most of my comments unhelpful in returning the details, and some comments later proved wrong because I'd revised the underlying code and failed to either delete an erroneous comment or revise my comment. By that time, I'd also read code written by others. About half the time, comments were distracting, and only occasionally were they enlightening.

This set of experiences led me to a terrible place. One where I'd write the fewest possible comments to preserve implementation details, with the observation that the code itself was decent documentation. At this point, I was writing and scripting in Sed, Awk, TCL, C, Matlab, and Verilog, one of which is strongly typed. Well-written headers with meaningful hierarchy and careful naming were enough to keep me from forgetting what I was doing. Since most of these fragments were to automate my analog design work, I considered them all throw-away.

I'm not proud of that stage in my coding life. I did enter into the arrogant camp. No one directly suffered. My projects died with me, or in one case, patented with the code disclosed, a requirement for the preferred embodiment, preserving my crappy comments for the foreseeable future.

What never occurred to me was that my inability to write in English was the underlying problem. I believed this: if I bothered to write something down and wrote the absolute minimum wording to seem clear, I'd have effectively captured whatever I had in mind to preserve. Nothing could have been further from the truth.

An aside - Once upon a time, there was a computer peripheral definition with a committee standard. It was a detailed standard that defined the hardware interface and the command behavior. A design team very carefully implemented the standard in their product. But, when they tried to connect their device to the target system, they discovered a problem. It seemed that some commands were not executing. They found a bug in the software driver. It was a bug that depended on an implementation error for a feature in the peripheral. Eventually, the software vendor admitted to the bug, and the standards committee recognized the portions of the spec that led some vendors astray.

Since the software vendor was the "biggest dog" in the room, the design team ended up changing the hardware to allow the bug in the software to remain! The point is that multiple companies read the original spec, along with the software vendor, and many of them erred in their understanding. It is a difficult task to write things clearly.

I agree with you strongly. The overarching coding skill is learning to write well in a human language. We could argue code is law. We could teach ourselves to express our desires in a restricted language, but why? Use the restricted language for restricted tasks. And then use our native languages for what they're good for, explaining the operation of and need for the restricted task.